Problem set 7¶

Problem 1. Drunken sailors leaving a pub that is near a ditch¶

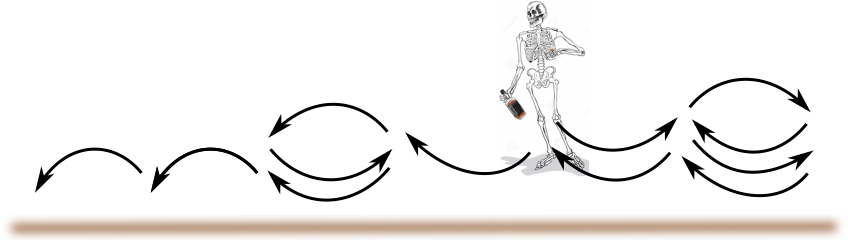

A random walk is sometimes described with a drunken sailor who takes a step and then forgets which way he was going and then takes another step. We start our sailors at $x=0$ (the pub). After $N$ steps we expect that the distribution of a bunch of sailors (all leaving the pub at the same time) and moving in 1 dimension is Gaussian in shape.

A normalized Gaussian probability distribution with mean $\mu=0$ and dispersion $\sigma$ is $$ p(x) = \frac{1}{\sqrt{2 \pi \sigma^2}} e^{-x^2/(2 \sigma^2)} $$ Here the dispersion (or standard deviation) $\sigma$ is the the square root of the variance. The variance of a probability distribution with zero mean is $$\sigma^2 = \int_{-\infty}^\infty p(x) x^2 dx $$

If the dispersion of a single step is $\sigma$ then the distribution of sailors after $N$ steps is well described by a probability distribution $$ p(x,N) = \frac{1}{\sqrt{2 \pi \sigma_N^2}} e^{-x^2/(2 \sigma_N^2)} $$ with $\sigma_N = \sqrt{N}\sigma$.

Below I illustrate a code that does a 1-dimensional random walk using a sum of Gaussian distributions. The resulting distribution of drunk sailors matches the predicted Gaussian distribution.

The Central Limit Theorem states that the sum of a bunch of independent variables should (in most cases)

be well described by a normal (Gaussian) distribution.

See https://en.wikipedia.org/wiki/Central_limit_theorem

So as long as each step is generated by a well behaved probability distribution, it does not matter what we use to generate it. In other words we should get the same behavior using steps generated from a normal distribution as we would if we had flipped a coin at each step, taking a step of equal length in either direction. Here well behaved means with finite mean and variance.

# example making a random walk and showing that the distribution after a number of

# steps is a gaussian

import numpy as np

%matplotlib inline

import matplotlib.pyplot as plt

from scipy import stats # has lots of distribution functions in it

from math import erfc # complimentary error function

# randomly walk nsteps and return the x value

# starting at x=0

# each step has zero mean and a variance of 1

def walkn(nsteps): # random walk using a normal distribution for step sizes

r = stats.norm.rvs(size=nsteps) # normal distribution mean=0 variance=1

# r is a vector values randomly generated with a normal distribution

return sum(r) # the sum of the entire vector!

# walk npart numbers of particles (or sailors) nsteps and return a vector of x positions

# the function that gives us a randomly generated position is walkn

def npart_walkn(npart,nsteps):

xvec = np.zeros(0)

for i in range(npart):

x = walkn(nsteps) # a single random walk value

xvec = np.append(xvec,x) # append each random walk to the vector

return xvec

nsteps = 100 # number of steps

npart = 1000 # number of particles (sailors) to let walk around

# fill a vector with npart walkers each walking nsteps

xvec = npart_walkn(npart,nsteps)

# this vector contains final positions of each walker

# plot the histogram, i.e., measured distribution of final positions

# after n steps of random walking

n, bins, patches =plt.hist(xvec,bins=20,density=True)

# this retuns as n the number of values counted, bins is the x values of the bins,

# patches is for plotting the histogram

plt.xlabel("x",fontsize=22)

plt.ylabel("p(x)",fontsize=22) # probability!

# a gaussian probability density distribution, this is a function!

mygaus = stats.norm(0.0, np.sqrt(nsteps)) # should scale with sqrt(nsteps)

xlin = np.linspace(1.1*min(bins),1.1*max(bins),100)

y = mygaus.pdf(xlin) # evaluate the function

plt.plot(xlin,y,"k", lw=3 ) #plot the expected density distribution as a black line

After 100 steps of random walk 1000 particles show a gaussian distribution with width predicted using the square root of the number of steps (and their sizes).

# check that if we increase the number of particles we get the same answer

nsteps = 400 # number of steps

npart = 4000 # number of particles to let walk around

xvec = npart_walkn(npart,nsteps)

# plot the histogram, i.e., measured distribution of final positions after n steps of random walking around

n, bins, patches =plt.hist(xvec,bins=30,density=True,alpha=0.5)

plt.xlabel("x",fontsize=22)

plt.ylabel("p(x)",fontsize=22)

mygaus = stats.norm(0.0, np.sqrt(nsteps)) # should scale with sqrt(nsteps)

xlin = np.linspace(1.1*min(bins),1.1*max(bins),100)

y = mygaus.pdf(xlin)

plt.plot(xlin,y,"g", lw=3 ) #plot the expected density distribution as a black line

After 400 steps of random walk 4000 particles show a gaussian distribution with width predicted using the square root of the number of steps (and their sizes). This picture should look even better than the last one as we ran more particles and steps, and that's good.

# notes on the stats package

n=0

stats.norm.rvs(size=n) # returns a vector n long that has random values from a normal distribution

stats.norm.rvs() # returns a single random number (generated from a normal distribution)

func = stats.norm.pdf # a probability density function that is normal!

# you can call this with func(x)

mean=0; std=1

stats.norm(mean, std).rvs() # uses a normal distribution with mean and standard deviation

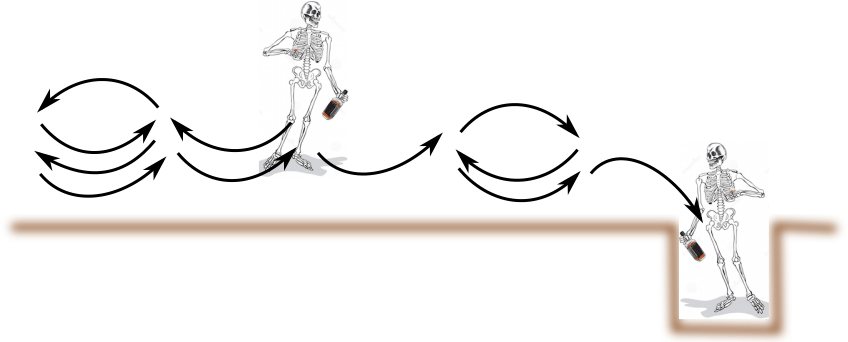

Problem 1 continued. Random walk with a ditch¶

If there is a post at $x_p>0$ the fraction of sailors currently past the post after $N$ steps of size chosen with dispersion $\sigma$ is

\begin{align*} f(x_p,N) &= \int_{x_p}^\infty \frac{1}{\sqrt{2 \pi \sigma_N^2}} e^{-x^2/(2 \sigma_N^2)} dx \\ &= \int_{x_p/(\sqrt{2}\sigma_N)}^\infty \frac{1}{\sqrt{\pi}}e^{-z^2} dz\\ &= \frac{1}{2} {\rm erfc}\left( \frac{x_p}{\sqrt{2}\sigma_N} \right) \end{align*}with $\sigma_N = \sqrt{N} \sigma$. This integral can be visualized as the area under the probability function past $x_p$.

The complimentary error function is defined as $$ {\rm erfc}(y) \equiv \frac{2}{\pi} \int_y^\infty e^{-t^2} dt $$

We now place a ditch at a particular $x$

location, $x_{ditch}$ which we take to be $>0$.

If a drunken sailor steps into the ditch then he can't get out and sleeps there until morning.

- Numerically compute a probability distribution (as a function of $x$) of remaining sailors that are not in the ditch after a number of steps $N$. Show that the probability distribution is not much different than a Gaussian distribution as long as $ \sqrt{N}\sigma \ll x_{ditch} $, but as $N$ increases, the distribution becomes lopsided. Make sure that your distribution is normalized (integrates to 1).

- Compare your probability distribution computed using number of particles, $N_{particles}$, with that computed using twice as many particles. Illustrate that your results are not strongly dependent on the number of particles with which you are computing random walk trajectories.

- As a function of of numbers of steps $N$, compute numerically the fraction of sailors left in the ditch. You should see that as $N$ increases, more sailors are left in the ditch. We can *estimate* the fraction of drunk sailors that have fallen into the ditch by integrating the tail of the probability density distribution in the absence of the ditch. After $N$ steps the fraction of sailors in the ditch is then $$ f(x_{ditch},N ) \sim \frac{1}{2} {\rm erfc}\left( \frac{x_{ditch}}{\sqrt{2}\sigma_N} \right)$$ The erfc function is available as *math.erfc()*

- As a function of the number of steps $N$, compare your numerically measured fraction of sailors in the ditch to the function $f(x_{ditch}, N )$ computed with the complimentary error function. Show that the analytical estimate is not a good approximation. In fact, many more sailors wind up in the ditch! Explain why.

For N=60 and $x_{ditch}=10$ I find that about twice as many sailors wind up in the ditch as incorrectly predicted with this formula.

Physicists have exploited this analogy to account for the trapping rates of atoms in a potential well due to thermal motions.

Problem 2. Levy Flights and heavy tailed distributions¶

An example of a probability distribution that is heavy tailed is the Cauchy distribution. See https://en.wikipedia.org/wiki/Cauchy_distribution

$$f(x) = \frac{1}{\pi} \frac{1}{x^2+ 1} $$The maximum of this function is $1/\pi$ and the full width half max can be found by solving for $x$ with $\frac{1}{{x^2} + 1} = 1/2$ (and then multiplying by 2). The full width half max (FWHM) is 2.

The full width half-max (FWHM = full width half max) can be controlled with a parameter $\gamma$ giving a probability distribution $$f(y) = \frac{1}{\pi\gamma} \frac{\gamma^2}{y^2+ \gamma^2} $$ The maximum value of this Cauchy distribution is $ \frac{1}{\pi\gamma}$ and its FWHM $=2 \gamma$.

The Cauchy distribution has a nice property that a sum of random variables drawn from Cauchy distributions is also

Cauchy, but with a larger FWHM.

However, the Cauchy distribution has tails that are pathological in the sense that the distribution

has infinite variance. It is an example of a distribution that violates the Central Limit theorem.

To qualitatively illustrate the implications, we show a 2-dimensional random walk generated with steps taken from a Cauchy distribution and compare it to that generated from a random walk taken with steps generated from a normal distribution.

To generate a random walk on the $x,y$ plane, we

generate an angle $\theta$ using a uniforma distribution in $[0,2\pi)$.

The angle is used to set the direction of a step.

We generate a distance $d$ for the step using

either the Cauchy or normal probability distributions.

The walker updates its $x,y$ position with

$x^{n+1} = x^n + d \cos \theta$

$y^{n+1} = y^n + d \sin \theta$

Here $(x^n,y^n)$ is the position at time step $n$.

This gives a randomly generated walk on the $x,y$ plane.

# example comparing 2-Dimensional random walks with normal and Cauchy distribution steps

# fill some vectors with random walks using Gaussian and using Cauchy distributions

# for sizes of steps

# 2d random walks, this routine returns 4 vectors, x,y for each random walk

# angles for each step are chosen from uniform distribution

# but distances moved in each step are chosen from normal or Cauchy distributions

# nsteps is the number of steps taken for both random walks

# mgamma is the gamma parameter for the Cauchy distribution

def fillvecs(nsteps,mgamma):

xCvec=np.zeros(0); yCvec=np.zeros(0) # vectors of positions for Cauchy steps

xC=0.0; yC=0.0; # initial conditions for the Cauchy walk

xCvec = np.append(xCvec,xC); yCvec = np.append(yCvec,yC)

# append initial conditions

xNvec=np.zeros(0); yNvec=np.zeros(0) # vectors of positions for normal steps

xN=0.0; yN=0.0 # initial conditions for the normal walk

xNvec = np.append(xNvec,xN); yNvec = np.append(yNvec,yN)

# append initial conditions

for i in range(nsteps):

theta1 = stats.uniform.rvs()*2.0*np.pi #uniformly distributed angles

rC = stats.cauchy.rvs(scale=mgamma) #cauchy distn step size

xC += rC*np.cos(theta1) # update coordinate positions

yC += rC*np.sin(theta1)

xCvec = np.append(xCvec,xC); yCvec = np.append(yCvec,yC) #Cauchy

rN = stats.norm.rvs() # normal distn step size

theta2 = stats.uniform.rvs()*2.0*np.pi

xN += rN*np.cos(theta2) #update coordinate positions

yN += rN*np.sin(theta2)

xNvec = np.append(xNvec,xN); yNvec = np.append(yNvec,yN) #Normal

return xCvec,yCvec,xNvec,yNvec # return the 4 vectors

The routines stats.cauchy.rvs() and stats.norm.rvs() can return a vector of randomly generated numbers whereas stats.norm.pdf and stats.cauchy.pdf are probability density functions.

mgamma=0.05 # gamma parameter for the Cauchy distribution

xC,yC,xN,yN = fillvecs(230,0.1) # get our random walk trajectories

# and plot them

plt.plot(xC,yC,'ro',alpha=0.5, label=r"Cauchy, $\gamma$=0.05")

plt.plot(xC,yC,'k-')

plt.plot(xN,yN,'bo',alpha=0.5,label="Normal")

plt.plot(xN,yN,'k-')

plt.legend(loc='best', frameon=False)

plt.axis('equal'); # set aspect ratio

We notice that the normal distribution random walk looks very different than the Cauchy one. The Cauchy one has intermittent clumps and large intermediate steps due to its tail. The two walks look very different! Here I have reduced the size of the Cauchy steps, so we could get the two walks nicely in the same window!

# do it again!

xC,yC,xN,yN = fillvecs(500,0.05) # get our random walk trajectories

# and plot them

plt.plot(xC,yC,'ro', alpha=0.5, label=r"Cauchy $\gamma=0.05$")

plt.plot(xC,yC,'k-')

plt.plot(xN,yN,'bo',alpha = 0.5, label="Normal")

plt.plot(xN,yN,'k-')

plt.legend(loc='best', frameon=False)

plt.axis('equal');

Sum of Normal and Sum of Cauchy¶

For $x,y$ both drawn from a Gaussian distribution with standard deviations $\sigma_x, \sigma_y$, and $z=x+y$, the standard deviation $\sigma_z = \sqrt{\sigma_x^2 + \sigma_y^2}$. The distribution of $z$ is also Gaussian.

For $x,y$ both drawn from a Cauchy distribution with HWHMs (half width half max) $\gamma_x, \gamma_y$, and $z=x+y$, the HWHM $\gamma_z = \gamma_x + \gamma_y$. The distribution of $z$ is also Cauchy.

Problem 2 continued¶

Consider a random variable $z = x+ y$ that is a sum of two randomly generated variables. We take $x$ generated with a normal distribution and $y$ generated with a Cauchy distribution.

In other words let $x$ be generated with a normal probability distribution $$ p(x) = \frac{1}{\sqrt{2 \pi }} e^{-x^2/2 } $$ and $y$ be generated with a Cauchy distribution with $$f(y) = \frac{1}{\pi\gamma} \frac{\gamma^2}{y^2+ \gamma^2} $$

We do a random walk where each step is given by $z$ and is the sum of a randomly generated $x$ and a randomly generated $y$. This is a 1-dimensional random walk.

Write a 1 dimensional random walk routine where each step is a sum of a step generated from a normal distribution and one from a Cauchy distribution.

To adjust the size of the Cauchy step, you can vary the parameter $\gamma$.

By running random walks for a bunch of particles you can look at the distributions after a number of steps.

You may need to limit the range of the histograms when you plot them, for example with

n, bins, patches =plt.hist(xvec,bins=20,density=True, range=[-50,50])- Show that after a while (a number of steps $N_{steps}$) the resulting distribution of locations has tails like the Cauchy distribution, and this is true even if you take smaller Cauchy steps (FWHM) (with $\gamma<1$) than normal steps at each time. At your chosen value of $\gamma$, how many steps does it take before the distribution of $z$ values for $N_{particles}$ resembles a Cauchy distribution?

Even if there are weak heavy tails, they will dominate the probablity distribution on long timescales.

At timestep $N$, the mean of the distribution of $N_{particles}$ is estimated as

$$ \mu_{N} = \frac{1}{N_{particles}} \sum_{i=1}^{N_{particles}} z_{i,N}$$

where $z_{i,N}$ is the position of the $i$-th particle after taking

$N$ steps in the walk.

The variance can be estimated as

$$\sigma_{N}^2 = \frac{1}{N_{particles}-1} \sum_{i=0}^{N_{particles}} (z_{i,N} - \mu_{N})^2 $$

Useful routines are numpy.var() or numpy.std() as they will compute $\sigma_N$ for you from an array of values!

- Compute the standard deviation $\sigma(N)$, (the sqrt of the variance $\sigma^2(N)$) of the particle ensemble at each time step $N$ and show that the estimate increases with increasing numbers of steps.

I recommend plotting $\log \sigma(N)$ vs $\log N$. For steps of the normal distribution alone (with no additional Cauchy steps) check that $\sigma(N) \approx \sqrt{N}$. Then plot $\sigma(N)$ for a walk that includes the additional small Cauchy steps (like with $\gamma=0.005$) and show that $\sigma(N)$ increases more rapidly than a sqrt dependence.

Relation to Diffusion¶

With the Cauchy distribution, every once in a while a particle every takes a huge step and these dominate the computation of the standard deviation. Only on short times (few steps) and with extremely small levels of the Cauchy steps would we see sqrt like behavior in the standard deviation as a function of the number of steps.

For a random walk generated with a Gaussian distribution with mean $\mu=0$, the standard deviation is well behaved and increases with the square root of the number of steps taken. A concentration of such walkers would obey the diffusion equation

$$\frac{\partial\rho}{\partial t} = D \left( \frac{\partial^2 \rho}{\partial x^2} + \frac{\partial^2 \rho}{\partial y^2} \right ) $$

with diffusion coefficient dependent on the time between steps and the variance of the Gaussian distribution.

A concentration of random walkers that move with steps drawn with the Cauchy distribution will not obey the diffusion equation.

This is related to what is called anomalous diffusion and Levy flights. See https://en.wikipedia.org/wiki/Anomalous_diffusion and https://en.wikipedia.org/wiki/L%C3%A9vy_flight